Overview

Welcome to the 3rd Workshop on AI for Content Generation, Quality Enhancement and Streaming at CVPR 2026

After the success of the 1st edition at CVPR 2024 and the 2nd edition at ICCV 2025, we continue to bring together researchers and practitioners in AI, streaming, and computer vision!

This workshop focuses on unifying new streaming technologies, computer graphics, and computer vision from a modern deep learning perspective. Streaming is a huge industry in which hundreds of millions of users demand high-quality content every day on different platforms.

Computer vision and deep learning have emerged as revolutionary forces for rendering content, image and video compression, enhancement, and quality assessment.

From neural codecs for efficient compression to deep learning-based video enhancement and quality assessment, these advanced techniques are setting new standards for streaming quality and efficiency.

Moreover, novel neural representations pose new challenges and opportunities in rendering streamable content, making it possible to redefine computer graphics pipelines and visual content.

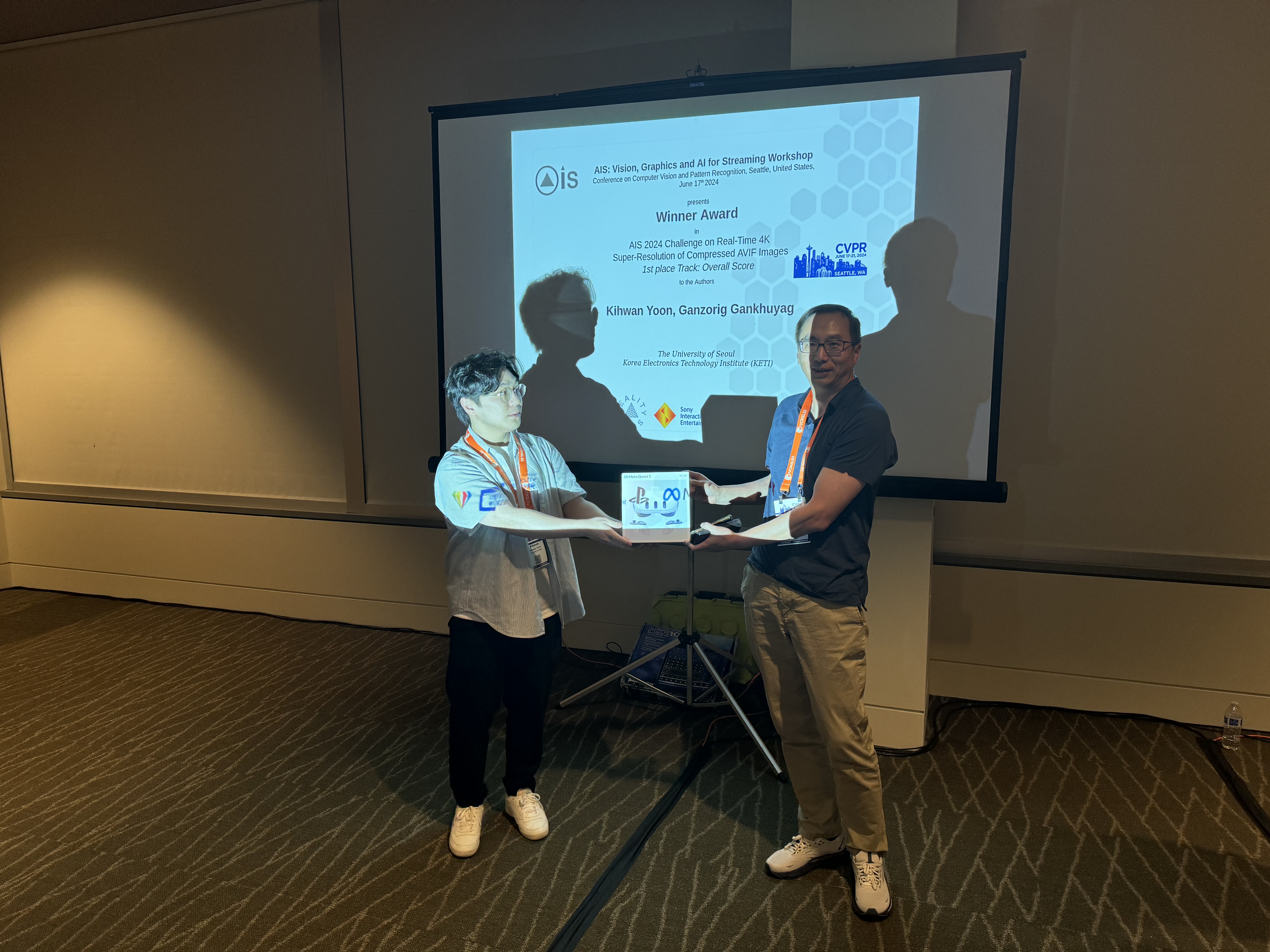

2024 Photo Gallery & Slides (CVPR)

Call for Papers

We welcome papers addressing topics related to VR, streaming, efficient image/video processing and compression. The topics include:

- Efficient Computer Vision

- Model optimization and Quantization

- Image/video quality assessment

- Image/video super-resolution and enhancement

- Compressed Input Enhancement

- On-device & edge processing

- Generative Models (Image & Video)

- DeepFakes

- Vision Language Models

- Real-time Rendering

- Neural Compression

- Computer Graphics

Submission site: https://cmt3.research.microsoft.com/AIGENS2026

Submission Guidelines

- A paper submission must be in English, in PDF format, and at most 8 pages (excluding references), following the CVPR style. The paper format must follow the same guidelines as all CVPR 2026 submissions (TBA).

- Dual submission is not allowed. If a paper is also submitted to another workshop or conference and is accepted there, it cannot be published both at that venue and at this workshop. If the paper is under review elsewhere, it cannot be submitted here.

- The review process is double-blind. Authors do not know the names of the chairs or reviewers of their papers, and reviewers do not know the names of the authors.

- Accepted papers will be included in the CVPR 2026 conference proceedings.

Important Dates

| Event | Date |
| --- | --- |
| Paper submission deadline | 17th March, 2026 |
| Paper decision notification | 20th March, 2026 |
| Camera ready deadline | 10th April, 2026 |

CMT Acknowledgement

The Microsoft CMT service was used for managing the peer-reviewing process for this conference. This service was provided for free by Microsoft and they bore all expenses, including costs for Azure cloud services as well as for software development and support.

Challenges

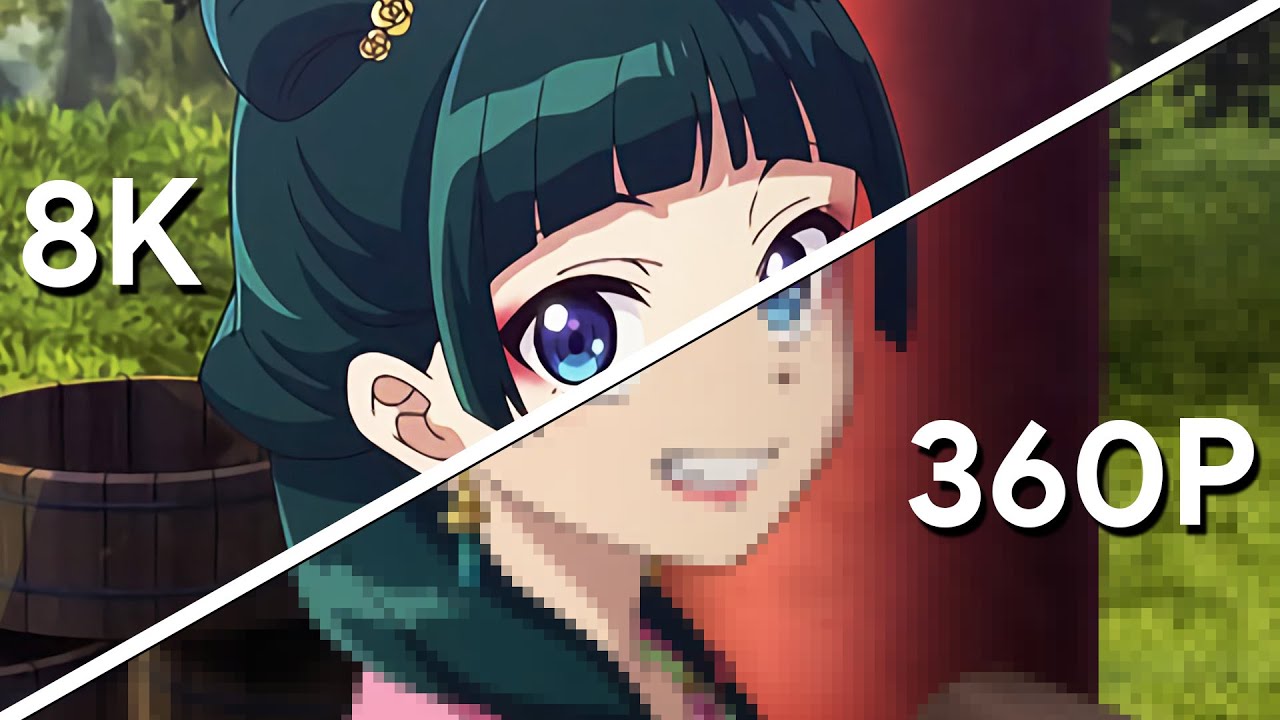

ReAnime Challenge: Anime Restoration and Upscaling

Data and Codabench submissions open soon. Stay tuned.

Invited Speakers (More to come)

Schedule (TBA)

Organizers

Program Committee and Advisors

Radu Timofte (University of Würzburg)

Eduard Zamfir (University of Würzburg)

Tim Seizinger (University of Würzburg)

Ioannis Katsavounidis (Meta)

Ryan Lei (Meta)

Cosmin Stejerean (Meta)

Heather Yu (Futurewei Technologies)

Zhiqiang Lao (Futurewei Technologies)

Ziteng Cui (University of Tokyo)

Shuhong Liu (University of Tokyo)

Lin Gu (Tohoku University)

Zhi Li (Netflix)

Ren Yang (Microsoft)

Varun Jain (Microsoft)

Saman Zadtootaghaj (Sony Interactive Entertainment)

Nabajeet Barman (Sony Interactive Entertainment)

Abhijay Ghildyal (Sony Interactive Entertainment)

Julian Tanke (Sony AI)

Takashi Shibuya (Sony AI)

Yuki Mitsufuji (Sony AI)

Fan Zhang (University of Bristol)

Shivansh Rao (GLASS Imaging)

Past Invited Speakers

Past/Present Sponsors, Organizers and Collaborators